Session Description

While digital technology has the potential to revolutionise healthcare, it can also exacerbate gender inequalities, deepen marginalisation, and enable human rights violations. In a world where our physical and digital identities are increasingly intertwined, bodily autonomy must include control over personal data. However, this control remains elusive for women, girls and those identifying as other genders, especially in low- and middle-income countries (LMICs). This 90-minute webinar focuses on data governance and sexual and reproductive health (SRH) in the context of a rapidly evolving digital health industry, and of privacy and data protection legislation that is struggling to keep up.

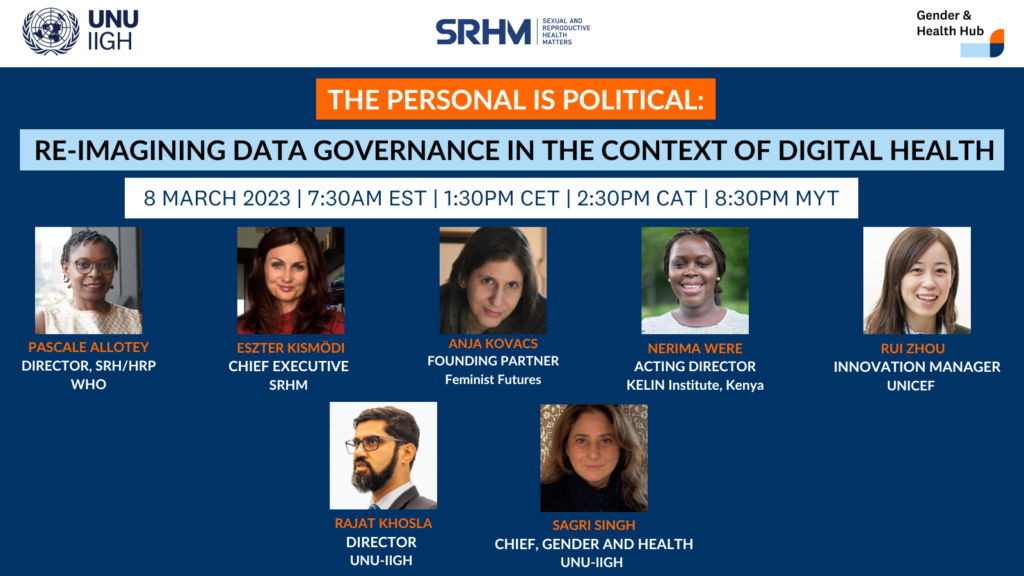

This event took place on Wednesday, 8 March 2023

Background


Digital technology has already had a proven, positive impact on sexual and reproductive health (SRH), sustainably and at scale, in LMICs. For those with access, the internet is a critical channel for SRH information. Further, artificial intelligence (AI) offers great promise as a source of timely, personalised, confidential answers to women's and girls' questions about sexual and reproductive health and rights (SRHR), and as a decision-support tool in the hands of frontline health workers and doctors.

However, optimism about the role of digital technologies, including AI, in accelerating improvements in SRH and protecting rights is increasingly qualified by concerns about the unintentional reinforcement of social inequities, exacerbation of current gender and intersecting digital divides, encoding of biases, spread of misinformation, targeted harassment and, critically, the misuse of personal data due to the lack of effective mechanisms for protecting safety, confidentiality and privacy.

- Reinforcement of social inequities and encoding of biases: The gender divide in digital access, skills and use in LMICs means that most datasets that could power AI solutions are drawn overwhelmingly from men. Further, women, girls and gender-diverse communities are underrepresented in key decision-making roles in the design of digital health interventions.

- Misinformation: AI, particularly AI-powered search engines, has unprecedented power to disseminate misinformation with little accountability. The repercussions of false information about SRHR, especially abortion, can be serious and are often fatal.

- Disinformation: Women are increasingly the target of disinformation campaigns about SRHR, and AI systems can be used as powerful disinformation tools.

- Lack of safe spaces and targeted harassment: While technology has been important for SRHR advocacy and movement organising both locally and globally, advocates, particularly women, are increasingly targets of harassment. Research indicates that 73% of women across the globe have experienced technology-facilitated gender-based violence (TFGBV), including cyberbullying, cyberstalking, defamation, image-based abuse, sexual harassment, doxing, gender-trolling, and hacking. Marginalised and disadvantaged groups are targeted more, including on the basis of race, class and caste.

- Privacy and data protection: As the depth and breadth of personal data being generated, stored, and used grows exponentially, existing approaches to data governance have not kept pace. Now that data is famously "the new oil", questions are being raised about how data is being used across voice/speech recognition, video surveillance, customer service and marketing virtual digital assistants (VDAs), localisation and mapping, and sentiment and human emotion analysis. Further, it is evident that seemingly anonymised personal data can easily be de-anonymised by AI, facilitating the tracking, monitoring, and profiling of people as well as the prediction of their behaviours. Together with facial recognition technology, such AI systems can be used to cast a wide network of surveillance. All these issues raise urgent concerns about privacy. Critically, given that the law is silent on many privacy issues, mere compliance with regulation, some of which may be significantly outdated and not aligned with technological advances, no longer suffices.

Key guiding questions

- What are the risks of AI developments racing ahead of privacy and data protection (PDP) legislation, and of the lack of PDP acts in most LMICs?

- Do commonplace understandings of consent unrealistically presuppose a subject capable of making a meaningful choice (see Hirschmann, 1992; Drakopoulou, 2007), and fail to account for, and hence legitimate, the gendered power structures that shape the situation in which consent can be given (Lacey, 1998; MacKinnon, 1989; Pateman, 1988; Loick, 2019)?

- How can data governance be reimagined, in consideration of the limitations of consent-based frameworks, particularly for groups with low digital literacy and low incomes?

- What would an effective and inclusive health data governance approach look like?

- How do we reduce the factors that give a few corporations the power to undermine citizens’ ability to act autonomously for the protection of their personal data, particularly in the context of the growing role of private actors in public health systems? Could we replace the logic of data business with the logic of fundamental rights?

Outcomes

The webinar articulates how we can:

- Emphasise existing inequalities between different data subjects and specify in a more systematic and consolidated way that the exercise of data rights is conditioned by many factors such as health, age, gender or social status (Malgieri and Niklas, 2020, p. 2).

- Promote the widest possible public debate on the development of big data technologies and personal data protection regulations, even questioning their conceptual foundations.

- Work with public institutions to support this debate by providing mechanisms of transparency, external control and accountability of big data technologies and data-driven corporations.